
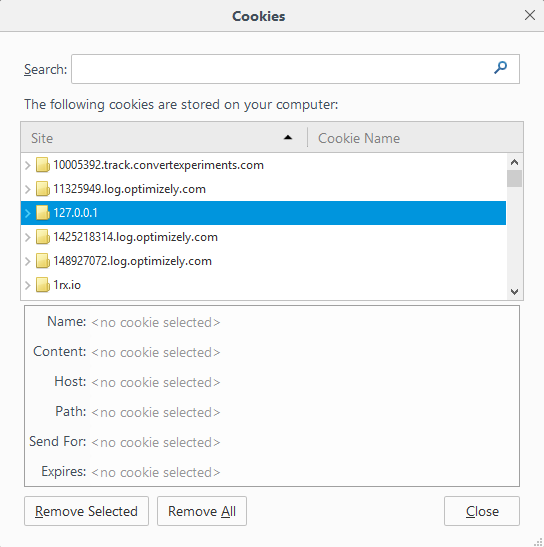

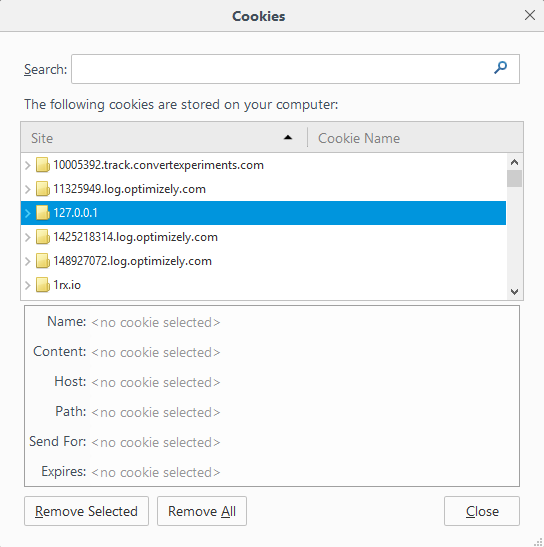

Sat, Apr 01, 2017 6:14 pm

Can't log into phpMyAdmin

I was unable to log into phpMyAdmin from Firefox. Every time I entered the user name and password, I was presented with the login screen again. I was able to resolve the problem by removing the cookies for the site on which phpMyAdmin was running from within Firefox 52.0 using the following process:

- Click on the menu button at the top, right-hand corner of the Firefox window (the one that has 3 horizontal bars) and select Options.
- Select Privacy.
- Under History, click on the "remove individual cookies" link.
- Click on the site on which phpMyAdmin is running to select it, then click on Remove Selected. Don't click on Remove All, or you will remove the cookies for all sites.
- Click on Close to close the cookies list window.


Fri, Sep 09, 2016 9:58 pm

Benchmarking a website's performance with ab

You can benchmark a website's performance using the ApacheBench utility, ab, which is available on Mac OS X and Linux systems. The tool was originally developed to test Apache web servers, but it can be used to test web servers running any web server software. The tool reports the web server software in use on the server being tested in a "Server Software" line in its output.

On an OS X system, you can run the ab command from a Terminal window; the Terminal application is found in the /Applications/Utilities directory. To test a website, e.g., example.com, issue a command in the form ab http://example.com/. Include the trailing slash: ab expects a path after the host name and rejects a URL such as http://example.com as invalid.

```
$ ab http://example.com/
This is ApacheBench, Version 2.3 <$Revision: 1663405 $>
Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking example.com (be patient).....done

Server Software:        ECS
Server Hostname:        example.com
Server Port:            80

Document Path:          /
Document Length:        1270 bytes

Concurrency Level:      1
Time taken for tests:   0.042 seconds
Complete requests:      1
Failed requests:        0
Total transferred:      1622 bytes
HTML transferred:       1270 bytes
Requests per second:    23.96 [#/sec] (mean)
Time per request:       41.744 [ms] (mean)
Time per request:       41.744 [ms] (mean, across all concurrent requests)
Transfer rate:          37.95 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:       19   19   0.0     19      19
Processing:    23   23   0.0     23      23
Waiting:       21   21   0.0     21      21
Total:         42   42   0.0     42      42
```
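By default ab sends a single request, as in the run above, which says little about performance; its -n option sets the total number of requests and -c the number of concurrent requests. When comparing several runs, it can help to pull individual figures out of the report. The helper below is a minimal sketch; the function name ab_field is hypothetical, and it assumes ab's usual "Label: value" report lines.

```shell
#!/bin/sh
# Hypothetical helper: print the value of one "Label: value" line from
# ApacheBench output read on stdin, e.g. "Server Software" or
# "Requests per second". Only the first matching line is printed, since
# ab reports "Time per request" twice.
ab_field() {
    awk -v label="$1" '
        index($0, label ":") == 1 {
            sub(/^[^:]*:[ \t]*/, "")   # drop the label and the padding
            print
            exit
        }
    '
}

# Example (requires network access):
#   ab -n 100 -c 10 http://example.com/ | ab_field 'Requests per second'
```

Piping the report shown above into ab_field 'Server Software' would print ECS.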


Sat, Jun 27, 2015 8:13 pm

You don't have permission to access /phpmyadmin on this server

When I tried to access phpMyAdmin on a CentOS 7 system running Apache web server software, I saw the message below:

Forbidden

You don't have permission to access /phpmyadmin on this server.

I looked for phpmyadmin.conf but couldn't find it on the system; then I realized that I needed to use an uppercase "M" and "A" in the file name:

```
# locate phpmyadmin.conf
# locate phpMyAdmin.conf
/etc/httpd/conf.d/phpMyAdmin.conf
```

I thought I had allowed access to phpMyAdmin on the webserver from all internal systems on the same LAN by modifying phpMyAdmin.conf to allow access from the subnet on which those systems resided. I checked the configuration file again, and it appeared I had allowed access there.

```
<Directory /usr/share/phpMyAdmin/>
   AddDefaultCharset UTF-8

   <IfModule mod_authz_core.c>
     # Apache 2.4
     <RequireAny>
       Require ip 127.0.0.1 192.168.0
       Require ip ::1
     </RequireAny>
   </IfModule>
   <IfModule !mod_authz_core.c>
     # Apache 2.2
     Order Deny,Allow
     Deny from All
     Allow from 127.0.0.1 192.168.0
     Allow from ::1
   </IfModule>
</Directory>
```

Since the internal systems were on a 192.168.0.0/24 subnet, I had previously added 192.168.0 to the Require ip and Allow from lines, so that access was allowed both from the localhost address, 127.0.0.1, i.e., from the system itself, and from other systems on the LAN. I knew I had made that change quite some time ago and that the Apache webserver had been restarted a number of times since then.
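Apache treats a partial address such as 192.168.0 in a Require ip or Allow from line as a prefix made of whole octets, so, e.g., 10.1 matches 10.1.2.3 but not 10.10.2.3 (writing the subnet as 192.168.0.0/24 is an equivalent, more explicit form). The shell functions below are only a sketch of that matching rule for IPv4 addresses, handy for checking which client addresses a given line admits; the names ip_matches_partial and ip_allowed are hypothetical, and this is not Apache's own code.

```shell
#!/bin/sh
# Sketch (not Apache's implementation): does IPv4 address $1 fall under the
# partial or full address $2? Matching is on whole octets: a "." is appended
# to the address and required after $2 in the pattern, so 10.1 matches
# 10.1.2.3 but not 10.10.2.3.
ip_matches_partial() {
    case "$1." in
        "$2".*) return 0 ;;
        *) return 1 ;;
    esac
}

# Hypothetical helper: succeed if address $1 matches any of the remaining
# arguments, as a "Require ip" line with several entries would.
ip_allowed() {
    ip=$1
    shift
    for partial in "$@"; do
        ip_matches_partial "$ip" "$partial" && return 0
    done
    return 1
}

# Example:
#   ip_allowed 192.168.0.22 127.0.0.1 192.168.0 && echo allowed
```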

I checked the IP address the server was seeing for the system from which I had tried accessing it using http://www.example.com/phpMyAdmin and realized it was seeing the external IP address of the firewall behind which the webserver resides. Because I had used the fully qualified domain name (FQDN) for the server, i.e., www.example.com, the connection from the internal system to the web server went out through the firewall and back in. When I used the internal IP address of the webserver on which phpMyAdmin resided, http://192.168.0.22/phpMyAdmin, I was able to access the phpMyAdmin interface from an internal system on the LAN.
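The entry doesn't say how the server's view of the client address was checked. One way, assuming the CentOS default access log /var/log/httpd/access_log in Apache's common or combined log format (client address in the first field, request path in the seventh), is sketched below; the function name phpmyadmin_clients is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: list the distinct client addresses that requested a
# /phpmyadmin path (any letter case) in an Apache access log read on stdin.
# Assumes common/combined log format, where field 1 is the client address
# (%h) and field 7 is the path from the quoted request line.
phpmyadmin_clients() {
    awk 'tolower($7) ~ /^\/phpmyadmin/ { print $1 }' | sort -u
}

# Example (as root on the web server):
#   phpmyadmin_clients < /var/log/httpd/access_log
```

If the only address listed for your own requests is the firewall's external address, the requests are going out through the firewall and back in.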

References:

- Installing phpMyAdmin on a CentOS System Running Apache. Date: August 8, 2010. MoonPoint Support


Wed, May 06, 2015 9:29 pm

Curl SSL certificate problem

When attempting to download a file via HTTPS from a website using curl, I saw the error message "SSL3_GET_SERVER_CERTIFICATE:certificate verify failed".

```
$ curl -o whitelist.txt https://example.com/BLUECOAT/whitelist.txt
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
curl: (60) SSL certificate problem, verify that the CA cert is OK. Details:
error:14090086:SSL routines:SSL3_GET_SERVER_CERTIFICATE:certificate verify failed
More details here: http://curl.haxx.se/docs/sslcerts.html

curl performs SSL certificate verification by default, using a "bundle"
 of Certificate Authority (CA) public keys (CA certs). If the default
 bundle file isn't adequate, you can specify an alternate file
 using the --cacert option.
If this HTTPS server uses a certificate signed by a CA represented in
 the bundle, the certificate verification probably failed due to a
 problem with the certificate (it might be expired, or the name might
 not match the domain name in the URL).
If you'd like to turn off curl's verification of the certificate, use
 the -k (or --insecure) option.
```

When I added the -k option, I was able to download the file successfully.

```
$ curl -o whitelist.txt -k https://example.com/BLUECOAT/whitelist.txt
```

But I wanted to know what the issue was with the public key certificate, and I wanted to get that information from a Bash shell prompt.

You can get the certificate from a website using the command openssl s_client -showcerts -connect fqdn:443, where fqdn is the fully qualified domain name for the website, e.g., example.com. Port 443 is the standard port used for HTTPS. Redirect the output to a .pem file to save the certificate. When I used openssl s_client -showcerts -connect example.com:443 >example.pem, I saw the message "verify error:num=19:self signed certificate in certificate chain" displayed, which revealed the source of the problem: the certificate chain ended in a self-signed certificate that wasn't among the trusted CA certificates.

A self-signed certificate is one that has been signed by the same entity whose identity it certifies. For a site using a self-signed certificate, your traffic to and from that site is protected from eavesdroppers along the path of the traffic, but the certificate doesn't offer validation that the site belongs to the entity claiming to own it. However, if you have other reasons to trust the site, or are only concerned about third parties eavesdropping on your communications with the site, then a self-signed certificate may be adequate. E.g., the site could be your own site or belong to someone or an entity you know is in control of the website. Some organizations use self-signed certificates for internal sites with the expectation that members/employees will ignore browser warnings for the internal websites, though if people become accustomed to ignoring such errors, there is the danger that they will also be more prone to ignore such warnings for external sites where a site's true controlling entity isn't the one they expect.

```
$ openssl s_client -showcerts -connect example.com:443 >example.pem
depth=1 /C=US/ST=Maryland/L=Greenbelt/O=ACME/OU=EXAMPLE/CN=EXAMPLE CA
verify error:num=19:self signed certificate in certificate chain
verify return:0
read:errno=0
```

The s_client subcommand uses a generic SSL/TLS client to establish the connection to the server. The openssl man page describes it as follows:

```
s_client    This implements a generic SSL/TLS client which can establish
            a transparent connection to a remote server speaking SSL/TLS.
            It's intended for testing purposes only and provides only
            rudimentary interface functionality but internally uses
            mostly all functionality of the OpenSSL ssl library.
```

The certificate is stored in example.pem in this case. You would need to edit the file to remove everything except the "BEGIN CERTIFICATE" and "END CERTIFICATE" lines and the lines that lie between them:

```
-----BEGIN CERTIFICATE-----
-----END CERTIFICATE-----
```

Or you can use a shell script, retrieve-cert.sh, to obtain the certificate; it strips off the extraneous lines. The code for the script is shown below:

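```shell
#!/bin/sh
#
# usage: retrieve-cert.sh remote.host.name [port]
#
REMHOST=$1
REMPORT=${2:-443}

if [ -z "$REMHOST" ]; then
    echo "usage: $0 remote.host.name [port]" >&2
    exit 1
fi

# Pipe in echo's output so s_client reaches end of input and exits after the
# handshake, then keep only the lines from BEGIN to END CERTIFICATE.
echo |
    openssl s_client -connect "${REMHOST}:${REMPORT}" 2>&1 |
    sed -ne '/-BEGIN CERTIFICATE-/,/-END CERTIFICATE-/p'
```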
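Instead of switching off verification with -k, you can point curl's --cacert option at the CA certificate, as curl's error message suggests. With -showcerts, s_client prints every certificate the server sends, with the server's own certificate first, so when the server includes its CA certificate (as the depth=1 EXAMPLE CA entry above suggests), the last PEM block is the one to save. Below is a sketch with the hypothetical function name last_pem_block and the hypothetical output file example-ca.pem. Only trust a certificate obtained this way after confirming it, e.g., by its fingerprint, through some other channel, since it arrived over the very connection you are trying to verify.

```shell
#!/bin/sh
# Hypothetical helper: print only the last BEGIN/END CERTIFICATE block
# from text on stdin, e.g. "openssl s_client -showcerts" output, where
# the server's own certificate comes first and CA certificates follow.
last_pem_block() {
    awk '
        /-----BEGIN CERTIFICATE-----/ { block = ""; inblock = 1 }
        inblock                       { block = block $0 "\n" }
        /-----END CERTIFICATE-----/   { inblock = 0; last = block }
        END                           { printf "%s", last }
    '
}

# Example (requires network access; check the fingerprint before trusting):
#   echo | openssl s_client -showcerts -connect example.com:443 2>/dev/null |
#       last_pem_block > example-ca.pem
#   openssl x509 -noout -fingerprint -in example-ca.pem
#   curl --cacert example-ca.pem -o whitelist.txt \
#       https://example.com/BLUECOAT/whitelist.txt
```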

You can display information about the certificate in the PEM file using the command openssl x509 -text -in example.pem. If you use -noout -issuer instead of -text, only the issuer information is displayed. With the following command I could see that the certificate had been issued by EXAMPLE CA, the internal CA whose self-signed certificate openssl had flagged in the chain:

```
$ openssl x509 -noout -in example.pem -issuer
issuer= /C=US/ST=Maryland/L=Greenbelt/O=ACME/OU=EXAMPLE/CN=EXAMPLE CA
```
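A quick check for whether a certificate is self-issued is to compare its subject with its issuer: a self-signed certificate has identical subject and issuer names (confirming that it is genuinely self-signed would also require checking the signature, which this heuristic skips). Here is a minimal sketch, with the hypothetical function name is_self_issued, that reads the output of openssl x509 -noout -subject -issuer:

```shell
#!/bin/sh
# Hypothetical helper: succeed if the "subject=" and "issuer=" lines printed
# by "openssl x509 -noout -subject -issuer" (read on stdin) name the same
# entity. This compares names only; it does not verify the signature.
is_self_issued() {
    awk '
        /^subject=/ { sub(/^subject= */, ""); subj = $0 }
        /^issuer=/  { sub(/^issuer= */, "");  iss = $0 }
        END { exit !(subj != "" && subj == iss) }
    '
}

# Example:
#   openssl x509 -noout -subject -issuer -in example.pem | is_self_issued &&
#       echo "example.pem is self-issued"
```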

If you just want to verify the status of a certificate from the command line without storing the certificate locally, you can add the -verify 0 option:

```
-verify depth - turn on peer certificate verification
```

E.g.:

```
$ openssl s_client -showcerts -verify 0 -connect example.com:443
verify depth is 0
CONNECTED(00000003)
depth=1 /C=US/ST=Maryland/L=Greenbelt/O=ACME/OU=EXAMPLE/CN=EXAMPLE CA
verify error:num=19:self signed certificate in certificate chain
verify return:0
88361:error:14090086:SSL routines:SSL3_GET_SERVER_CERTIFICATE:certificate verify failed:/SourceCache/OpenSSL098/OpenSSL098-52.6.1/src/ssl/s3_clnt.c:998:
```

You can filter out everything the command prints except the "verify error" line with a command like the following:

```
$ openssl s_client -showcerts -verify 0 -connect example.com:443 2>&1 | grep "verify error"
verify error:num=19:self signed certificate in certificate chain
```
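In a script, a numeric result may be easier to test than the error text. openssl s_client normally ends its session summary with a "Verify return code: N (description)" line, where 0 means the chain verified. That line appears only once a handshake completes, so run s_client without -verify here; when verification fails with -verify, as in the run above, the connection ends before the summary and there is nothing to extract. A sketch, with the hypothetical function name verify_return_code:

```shell
#!/bin/sh
# Hypothetical helper: print the number from the first
# "Verify return code: N (...)" line in openssl s_client output on stdin.
# 0 means the certificate chain verified; any other value is an error code.
verify_return_code() {
    sed -n 's/^ *Verify return code: \([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Example (requires network access):
#   code=$(echo | openssl s_client -connect example.com:443 2>/dev/null |
#          verify_return_code)
#   [ "$code" = 0 ] || echo "certificate verification failed: $code"
```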

For another internal website, when I accessed the site in Firefox with https://cmportal, Firefox reported the following:

This Connection Is Untrusted

You have asked Firefox to connect securely to cmportal, but we can't confirm that your connection is secure.

Normally, when you try to connect securely, sites will present trusted identification to prove that you are going to the right place. However, this site's identity can't be verified.

What Should I Do?

If you usually connect to this site without problems, this error could mean that someone is trying to impersonate the site, and you shouldn't continue.

When I viewed the technical details for the certificate, Firefox informed me that:

cmportal uses an invalid security certificate. The certificate is only valid for the following names: 192.168.160.242, servera.example.com (Error code: ssl_error_bad_cert_domain)

When I tried downloading the home page for the site with curl, I saw the message below:

```
$ curl https://cmportal
curl: (51) SSL peer certificate or SSH remote key was not OK
```

I was able to get past that error with the -k or --insecure parameter to curl, though the page returned then reported that I was being denied access to the requested web page due to invalid credentials.

I downloaded the certificate for that site with openssl. Since openssl would wait for input after verify return:0, I piped in the output of echo "" to get it to complete.

```
$ echo "" | openssl s_client -showcerts -connect cmportal:443 >example.pem
depth=2 /C=US/O=Acme/OU=Anvils/OU=Certification Authorities/OU=Anvils Root CA
verify error:num=20:unable to get local issuer certificate
verify return:0
DONE
```

I removed all the lines before "BEGIN CERTIFICATE" and all those after "END CERTIFICATE" and then checked the subject of the certificate in that .pem file with the openssl command. That showed me a reference to servera, whereas I had accessed the site using cmportal.

```
$ openssl x509 -noout -in example.pem -subject
subject= /C=US/O=Acme/OU=Anvils/OU=Services/CN=servera.example.com
```
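That comparison can be scripted. The sketch below pulls the common name (CN) out of the subject line and compares it with the host name used in the URL; the function names subject_cn and cert_name_matches are hypothetical. It is deliberately simplistic: it ignores the certificate's subject alternative names (the list Firefox reported above) and wildcard names, which real clients take into account.

```shell
#!/bin/sh
# Hypothetical helper: print the CN from a "subject=" line produced by
# "openssl x509 -noout -subject" (stdin). Handles both the older
# "/C=US/.../CN=name" style and the newer "C = US, ..., CN = name" style.
subject_cn() {
    sed -n 's|^subject=.*CN *= *\([^,/]*\).*|\1|p'
}

# Hypothetical helper: succeed if the subject CN on stdin equals host $1.
# Simplified on purpose: subject alternative names and wildcards are ignored.
cert_name_matches() {
    cn=$(subject_cn)
    [ -n "$cn" ] && [ "$cn" = "$1" ]
}

# Example:
#   openssl x509 -noout -subject -in example.pem | cert_name_matches cmportal ||
#       echo "certificate was not issued to cmportal"
```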

If you've accepted a self-signed certificate, or a certificate with other issues, in Firefox, you can view the certificate by following the steps noted in Forgetting a certificate in Firefox.

References:

- Retrieving Password Protected Webpages Using HTTPS With Curl. Date: September 8, 2011. MoonPoint Support
- How To Verify SSL Certificate From A Shell Prompt. Date: May 23, 2009. nixCraft
- Example sites with broken security certs [closed]. Asked: November 9, 2009. Stack Overflow
- Command line tool for fetching and analyzing SSL certificate. Asked: April 17, 2014. Server Fault
- OpenSSL Command-Line HOWTO. Published: June 13, 2004; most recent revision: June 25, 2014. By: Paul Heinlein. madboa.com
- x509 - Certificate display and signing utility. OpenSSL: The Open Source toolkit for SSL/TLS


Mon, Feb 16, 2015 9:26 pm

phpMyAdmin 4.3.6 on CentOS 7

When I tried accessing phpMyAdmin on a CentOS 7 server running the Apache webserver software using http://example.com/phpmyadmin, I received the message below:

Forbidden

You don't have permission to access /phpmyadmin on this server.

I got the same error if I tried using the IP address of the system instead of example.com.

I could see the phpMyAdmin files on the system in /usr/share/phpMyAdmin, and the rpm command showed that the package for it was installed on the system.

```
# rpm -qa | grep Admin
phpMyAdmin-4.3.6-1.el7.noarch
```

And when I logged into the web server, opened a browser, and pointed it to http://localhost/phpmyadmin, I was able to get the phpMyAdmin login prompt. I could also get to the setup page at http://localhost/phpmyadmin/setup. I still received the "forbidden" error message, though, if I put the IP address of the system in the browser's address bar while logged into the system.

I encountered the same error message about 4 years ago, as noted in Installing phpMyAdmin on a CentOS System Running Apache. In that case my notes indicated I edited the phpmyadmin.conf file to add access from an additional IP address. But when I looked for a phpmyadmin.conf file on the current system, there was none to be found. After a little further investigation, though, I found I should have been looking for phpMyAdmin.conf rather than phpmyadmin.conf, i.e., a file with a capital "M" and "A" in the file name.

```
# locate phpMyAdmin.conf
/etc/httpd/conf.d/phpMyAdmin.conf
```
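Since the mix-up was only in letter case, a case-insensitive search avoids it altogether; with mlocate, locate -i phpmyadmin.conf ignores case. Where the locate database is missing or out of date, find can do the same job. The sketch below assumes GNU or BSD find, since its -iname test is a common extension rather than part of POSIX; the function name find_conf_ci is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: search directory $1 for regular files named $2,
# ignoring letter case, so phpmyadmin.conf also finds phpMyAdmin.conf.
# Errors such as unreadable directories are discarded.
find_conf_ci() {
    find "$1" -type f -iname "$2" 2>/dev/null
}

# Example (as root on the CentOS 7 server):
#   find_conf_ci /etc/httpd phpmyadmin.conf
```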

I then added 192.168 after each instance of the localhost address, 127.0.0.1, in the Directory /usr/share/phpMyAdmin/ section of /etc/httpd/conf.d/phpMyAdmin.conf, as shown below. Since the other systems on the LAN had addresses in the 192.168.xxx.xxx range, this let me access phpMyAdmin from any other system on the LAN.

```
<Directory /usr/share/phpMyAdmin/>
   AddDefaultCharset UTF-8

   <IfModule mod_authz_core.c>
     # Apache 2.4
     <RequireAny>
       Require ip 127.0.0.1 192.168
       Require ip ::1
     </RequireAny>
   </IfModule>
   <IfModule !mod_authz_core.c>
     # Apache 2.2
     Order Deny,Allow
     Deny from All
     Allow from 127.0.0.1 192.168
     Allow from ::1
   </IfModule>
</Directory>
```

I then restarted the Apache web server software by running apachectl restart from the root account. I was then able to access phpMyAdmin using the internal IP address of the system, e.g., http://192.168.0.5/phpmyadmin. However, http://example.com/phpmyadmin didn't work: even though I was accessing the server from a system on the same LAN, using the fully qualified domain name (FQDN) meant I was reaching the system through the external address on the outside of the firewall/router it sits behind. In this case, though, accessing it by IP address was sufficient.
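Before restarting after an edit like this, it can be worth checking the configuration syntax first, since a typo in phpMyAdmin.conf would otherwise keep Apache from starting again. apachectl configtest performs that check and exits with a non-zero status on errors; the sketch below, with the hypothetical function name safe_apache_restart, restarts only when the test passes. On CentOS 7, systemctl restart httpd is an alternative way to do the restart itself.

```shell
#!/bin/sh
# Hypothetical helper: restart Apache only if its configuration parses.
# "apachectl configtest" prints "Syntax OK" on success and exits non-zero
# when it finds an error, in which case the running server is left alone.
safe_apache_restart() {
    if apachectl configtest; then
        apachectl restart
    else
        echo "Apache configuration has errors; not restarting." >&2
        return 1
    fi
}

# Example (as root):
#   safe_apache_restart
```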


Sat, Aug 02, 2014 10:38 pm

phpMyAdmin SQL History

If you need to see a recent history of SQL commands you've run inside phpMyAdmin, click on the SQL icon, a box with "SQL" in red letters, just below "phpMyAdmin" at the upper, left-hand side of the phpMyAdmin window. A small window will then pop up containing a tab labeled SQL history. Click on that tab to see the recently entered SQL commands.


Fri, Mar 28, 2014 9:22 pm

Xenu Link Sleuth

When I checked the error log for this site this morning, I noticed an entry pointing to a nonexistent file on the site, which led me to check the Apache CustomLog file for information on why someone might have followed a link to a file that never existed on the site. I didn't discover the source of the incorrect link, but in the process of looking for it I found a very useful tool, Xenu Link Sleuth, which revealed a significant problem with the site due to a change I made this morning and pointed out broken internal links on the site.


Sun, Jan 06, 2013 2:48 pm

Using Firefox Cookies with Wget

If you need to use wget to access a site that relies on HTTP cookies to control access, you can log into the site with Firefox and use the Firefox add-on Export Cookies to export all of the cookies stored by Firefox to a file, e.g., cookies.txt. After installing the add-on, restart Firefox. You can then click on Tools and choose Export Cookies. Note: you may not get the cookie you need if you put Firefox in private browsing mode.

You can then use the cookies file you just exported with wget. E.g., presuming the cookies file was named cookies.txt and was in the current working directory, you could use the following:

```
wget --load-cookies=cookies.txt http://example.com/somepage.html
```
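The exported file uses the Netscape cookies.txt format that wget reads: one cookie per line, with seven tab-separated fields (domain, a flag saying whether subdomains match, path, a secure-only flag, expiry time in seconds since the epoch, name, and value), and lines starting with # treated as comments. To check that the export actually contains a cookie for the site before running wget, a short awk filter like the sketch below can list the name=value pairs for one domain. The function name cookies_for_domain is hypothetical, and it skips every line beginning with #, including the "#HttpOnly_" lines some tools write.

```shell
#!/bin/sh
# Hypothetical helper: print name=value for each cookie in a Netscape-format
# cookies file ($2) whose domain is $1 or a subdomain of $1.
# Fields are tab-separated: domain, subdomain flag, path, secure flag,
# expiry, name, value. Lines starting with # (and short lines) are skipped.
cookies_for_domain() {
    awk -v d="$1" '
        BEGIN { FS = "\t" }
        /^#/ || NF < 7 { next }
        {
            host = $1
            sub(/^\./, "", host)          # ".example.com" -> "example.com"
            if (host == d || substr(host, length(host) - length(d)) == "." d)
                print $6 "=" $7
        }
    ' "$2"
}

# Example:
#   cookies_for_domain example.com cookies.txt
```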


Sat, Sep 10, 2011 4:19 pm

Submitting a form with POST using cURL

I needed to submit a form on a webpage using cURL. The form submission used POST rather than GET. You can tell which method is being used by examining the source code for the page containing the form. If POST is being used, you will see it listed as the form method in the form tag, as in the example below. A form that uses GET instead would have "get" as the form method; GET is also what browsers use when no method is specified.

```html
<form method=post action=https://example.com/cgi-bin/SortDetail.pl>
```

You can use the -d or --data option with cURL to use POST for a form submission. The curl man page describes the option as follows:

```
-d/--data <data>
    (HTTP) Sends the specified data in a POST request to the HTTP server, in
    the same way that a browser does when a user has filled in an HTML form
    and presses the submit button. This will cause curl to pass the data to
    the server using the content-type application/x-www-form-urlencoded.
    Compare to -F/--form.

    -d/--data is the same as --data-ascii. To post data purely binary, you
    should instead use the --data-binary option. To URL-encode the value of
    a form field you may use --data-urlencode.

    If any of these options is used more than once on the same command line,
    the data pieces specified will be merged together with a separating
    &-symbol. Thus, using '-d name=daniel -d skill=lousy' would generate a
    post chunk that looks like 'name=daniel&skill=lousy'.

    If you start the data with the letter @, the rest should be a file name
    to read the data from, or - if you want curl to read the data from stdin.
    The contents of the file must already be URL-encoded. Multiple files can
    also be specified. Posting data from a file named 'foobar' would thus be
    done with --data @foobar.
```

To submit the form using cURL, I used the following:

```
$ curl -u jsmith:SomePassword -d "Num=&Table=All&FY=&IP=&Project=&Service=&portNo=&result=request&display_number=Find+Requests" -o all.html https://example.com/cgi-bin/SortDetail.pl
```

In this case the website was password protected, so I had to use the -u option to submit a userid and password in the form -u userid:password. If you omit the :password and just use -u userid, cURL will prompt you for the password. So, if you want to store the cURL command in a script, such as a Bash script, but don't want to store the password in the script, you can simply omit the :password.

The -d option provides the parameters required by the form and the values for those parameters, which were as follows in this case:

| Parameter | Value |
|---|---|
| Num | |
| Table | All |
| FY | |
| IP | |
| Project | |
| Service | |
| portNo | |
| result | request |
| display_number | Find+Requests |

The format for submitting values for parameters is parameter=value. Parameters are separated by an ampersand, &.

URLs can only be sent over the Internet using the ASCII character set. Characters outside that set, and characters that are unsafe or reserved in URLs, such as the space character, must be represented with a % followed by two hexadecimal digits. In form data, the space character can be represented by + or by %20. So, though the value for "display_number" is "Find Requests", it needs to be sent as Find+Requests or Find%20Requests. You can see a list of other characters that should be encoded at URL Encoding.

In this case, I didn't need to specify values for many parameters in the form, because I wanted the query to cover all potential values for those parameters. So I can just use parameter= and then follow that with an & to indicate that I am submitting the next parameter in the list.
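Building that string by hand is error-prone once values contain spaces or characters such as & and =. cURL can do the encoding itself: each --data-urlencode name=value option encodes the value part and, like -d, the pieces are joined with &. For cases where you want the encoded string in a shell variable, the sketch below does application/x-www-form-urlencoded encoding in plain POSIX sh. The function names form_urlencode and build_form_data are hypothetical, and the code assumes ASCII input; non-ASCII characters are not handled reliably.

```shell
#!/bin/sh
# Hypothetical helper: encode $1 for application/x-www-form-urlencoded data.
# Letters, digits and . ~ _ - pass through, a space becomes "+", and any
# other character becomes %XX (its ASCII code in hex). ASCII input assumed.
form_urlencode() {
    s=$1
    out=
    while [ -n "$s" ]; do
        rest=${s#?}             # everything after the first character
        c=${s%"$rest"}          # the first character itself
        s=$rest
        case $c in
            [a-zA-Z0-9.~_-]) out="$out$c" ;;
            ' ') out="$out+" ;;
            *) out="$out$(printf '%%%02X' "'$c")" ;;
        esac
    done
    printf '%s\n' "$out"
}

# Hypothetical helper: join name value pairs into name=value&name=value,
# encoding both parts; empty values are allowed (e.g. "Num=").
build_form_data() {
    data=
    while [ "$#" -ge 2 ]; do
        pair="$(form_urlencode "$1")=$(form_urlencode "$2")"
        data="${data:+$data&}$pair"
        shift 2
    done
    printf '%s\n' "$data"
}
```

E.g., curl -u jsmith -d "$(build_form_data Num '' Table All display_number 'Find Requests')" -o all.html https://example.com/cgi-bin/SortDetail.pl would post Num=&Table=All&display_number=Find+Requests and prompt for the password.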

References:

- cURL - Tutorial. cURL and libcurl
- curl Examples. Linux Journal | Linux Tips
- POST (HTTP). Wikipedia, the free encyclopedia
- The POST Method. James Marshall's Home Page
- How to submit a form using PHP. HTML Form Guide - All about web forms!
- HTML URL Encoding. W3Schools Online Web Tutorials
- URL Encoding. Bloo's Home Page


Thu, Sep 08, 2011 9:25 pm

Retrieving Password Protected Webpages Using HTTPS With Curl

Mac OS X systems come with the curl command line tool, which provides the capability to retrieve web pages from a shell prompt. To use the tool, open Finder, go to Applications, then Utilities, and double-click on Terminal to obtain a shell prompt.

Curl is also available for a variety of other operating systems, including DOS, Linux, and Windows. Versions for other operating systems can be obtained from cURL - Download. If you will be retrieving encrypted webpages using the HTTPS protocol, be sure to get a binary version that includes Secure Sockets Layer (SSL) support.

A program with similar functionality is Wget, but it isn't included by default with the current versions of the Mac OS X operating system.

On Mac OS X systems, curl is located in /usr/bin, and help on its options can be found using man curl, curl -h, curl --help, and curl --manual. An online manual can be viewed at cURL - Manual.

To retrieve a webpage that requires a userid and password for access with curl using the HTTPS protocol, you can use a command similar to the one below, where userid and password represent the userid and password required to access that particular webpage.

```
curl -u userid:password https://example.com/somepage.html
```

If you don't want to include the password on the command line, you can just specify the userid after the -u; curl will then prompt you for the password.

```
$ curl -u jsmith https://example.com/somepage.html
Enter host password for user 'jsmith':
```
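Another way to keep the password off the command line, and out of any script, is curl's -n (--netrc) option, which makes curl look up the login and password for the host in the .netrc file in your home directory. Because that file holds the password in plain text, it should be readable only by you. The sketch below appends an entry with those permissions; the function name write_netrc_entry is hypothetical, it reads the password from standard input so it doesn't end up in your shell history, and it doesn't handle passwords containing spaces.

```shell
#!/bin/sh
# Hypothetical helper: append "machine HOST login USER password PASS" to a
# netrc file. $1 = host, $2 = login, $3 = file (default: ~/.netrc).
# The password is read from stdin; the file is created with mode 600.
write_netrc_entry() {
    file=${3:-$HOME/.netrc}
    IFS= read -r password || [ -n "$password" ] || return 1
    ( umask 077 && touch "$file" ) || return 1
    chmod 600 "$file" || return 1
    printf 'machine %s login %s password %s\n' "$1" "$2" "$password" >> "$file"
}

# Example: type the password (not echoed) and press Enter, then fetch the page.
#   stty -echo; write_netrc_entry example.com jsmith; stty echo
#   curl -n -o somepage.html https://example.com/somepage.html
```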

If you wish to save the output in a file rather than have it go to stdout, i.e., rather than have it appear on the screen, you can use the -o/--output filename option, where filename is the name you wish to use for the output file. Curl will then report the number of bytes downloaded and the time it took to download the webpage.

```
$ curl -u jsmith:somepassword -o somepage.html https://example.com/somepage.html
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100 22924    0 22924    0     0  16308      0 --:--:--  0:00:01 --:--:-- 26379
```

References:

- cURL and libcurl
